Expert Network Map

Introduction

While enrolled in Fab Academy, I could easily identify experts within the Charlotte Fab Lab when I faced technical roadblocks. However, subject area experts were difficult to locate beyond my local lab, and vast amounts of helpful documentation shared by the global Fab community were hard to find. To resolve this problem, I created the Expert Network Map, a software project consisting of two components: a network analysis of the Fab Academy community and an interactive visualization of the network.

I executed the project in three distinct phases: I wrote a script to collect the data, designed a text-classification AI model to sort links by subject area for analysis, and utilized D3JS to present the findings in an interactive visualization.

Here is a link to the Expert Network Map! (be sure to use Chrome on a computer)

In July 2023, I virtually presented the Expert Network Map at the International Fab Lab Conference and Symposium, the largest digital fabrication event in the world. The Expert Network Map is live and used by over 500 students. Find my presentation below.

For a more detailed description of the project, please read below.

Thank you very much to Francisco Sanchez Arroyo for all of his guidance and help, as well as to Nadieh Bremer for her advice on visualization.

Project Description

Background

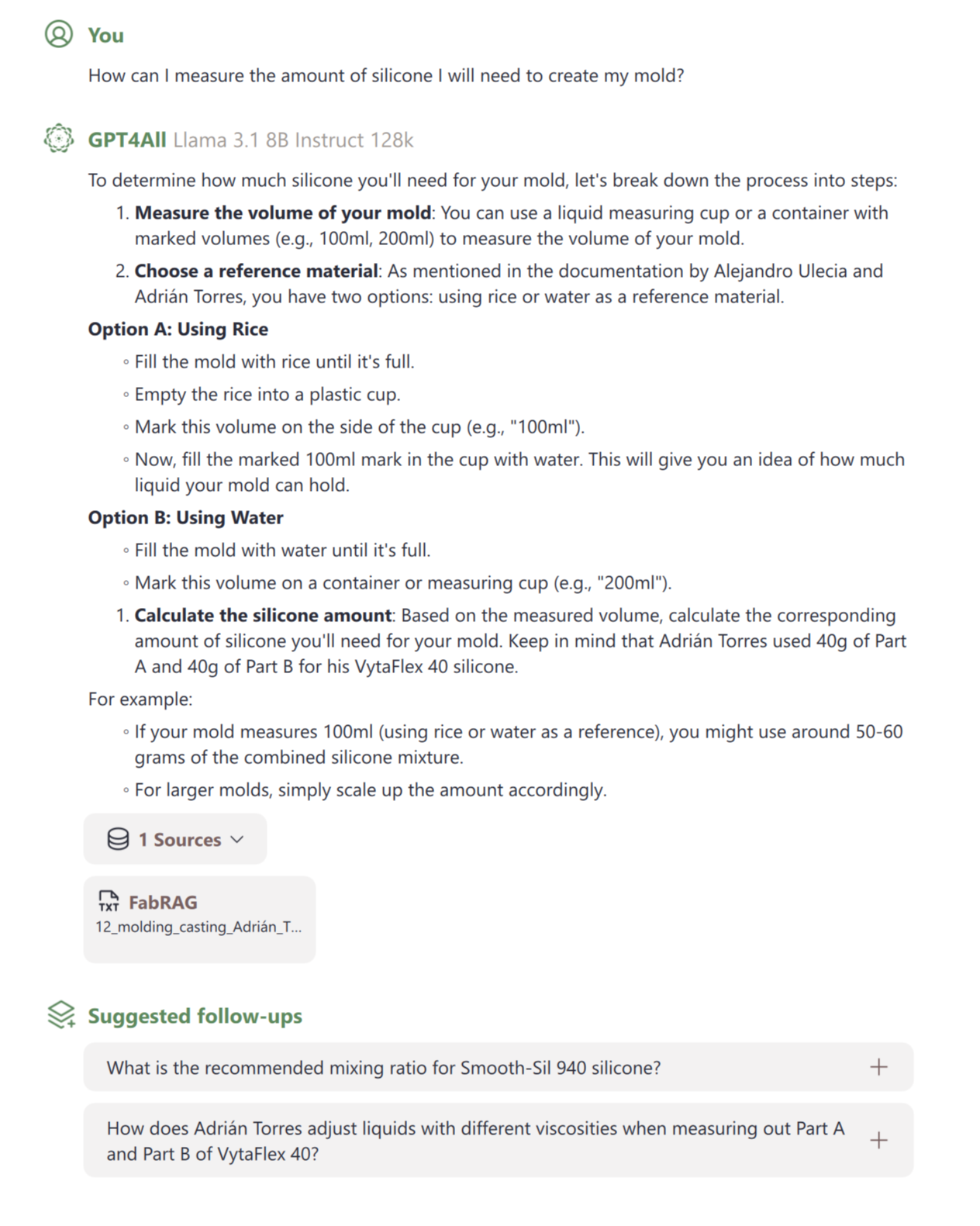

The Expert Network Map is an analysis of 1,000+ members of the Fab Academy community to locate hidden expertise in 17 subject areas and an interactive visualization of the network. Fab Academy is a digital fabrication course focused on rapid-prototyping led by MIT Professor Neil Gershenfeld. All Fab Academy students keep a documentation website detailing the process of completing projects, and students are encouraged to seek out others’ documentation to navigate technical roadblocks. For an example of a documentation website, see mine here. To give credit to content referenced, students add links in their own websites to the documentation websites they found helpful. See the box below for an example in my documentation website where I link to Nidhie Dhiman's documentation to give her credit.

Problem, Hypothesis, and Prototype

While I knew who had helpful documentation in my Charlotte Fab community, with over 1,000 documentation websites globally, there was a lot of untapped expertise I couldn’t locate. I recognized that if expert documentation could quickly be identified, all students would be able to work more efficiently.

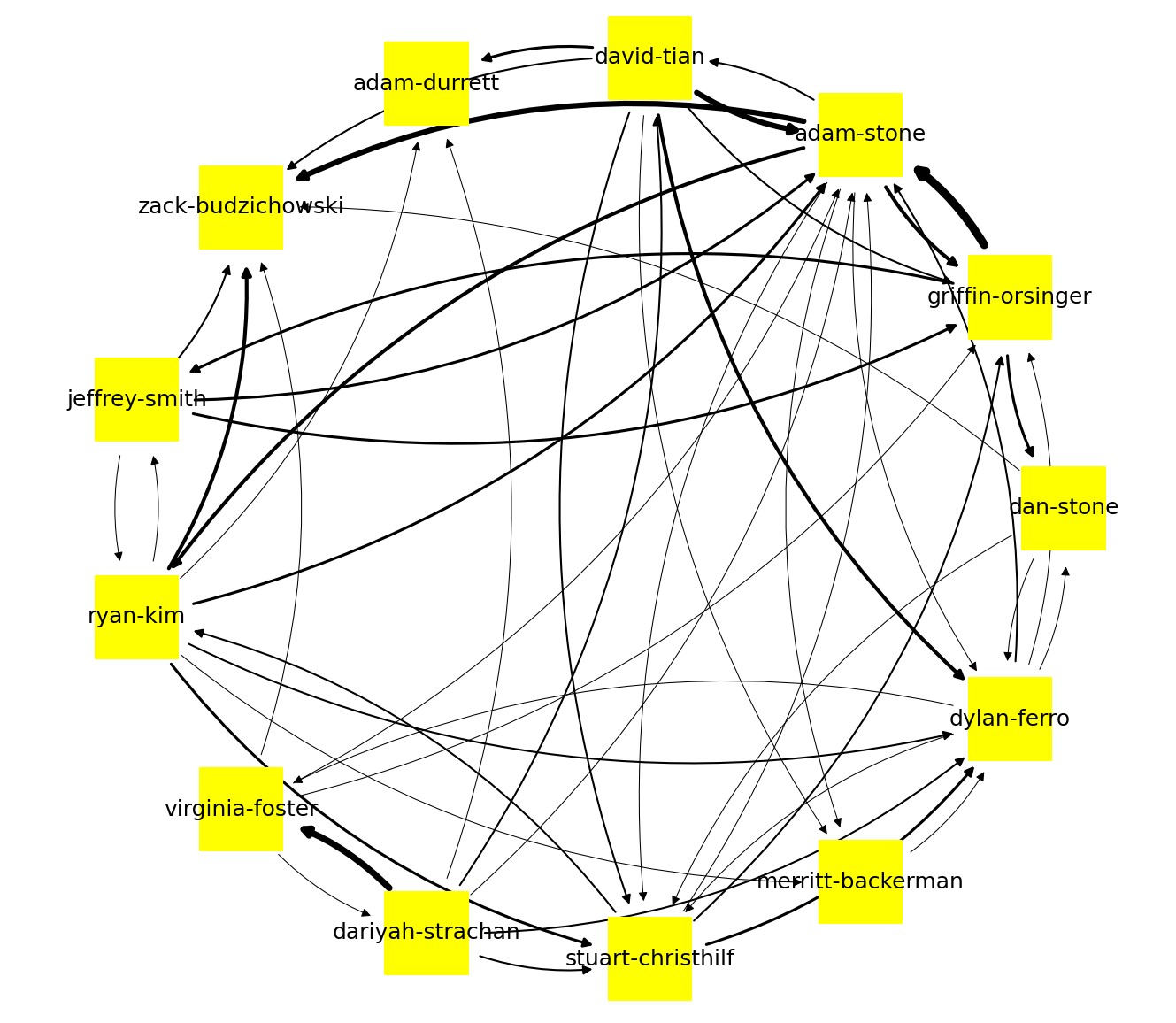

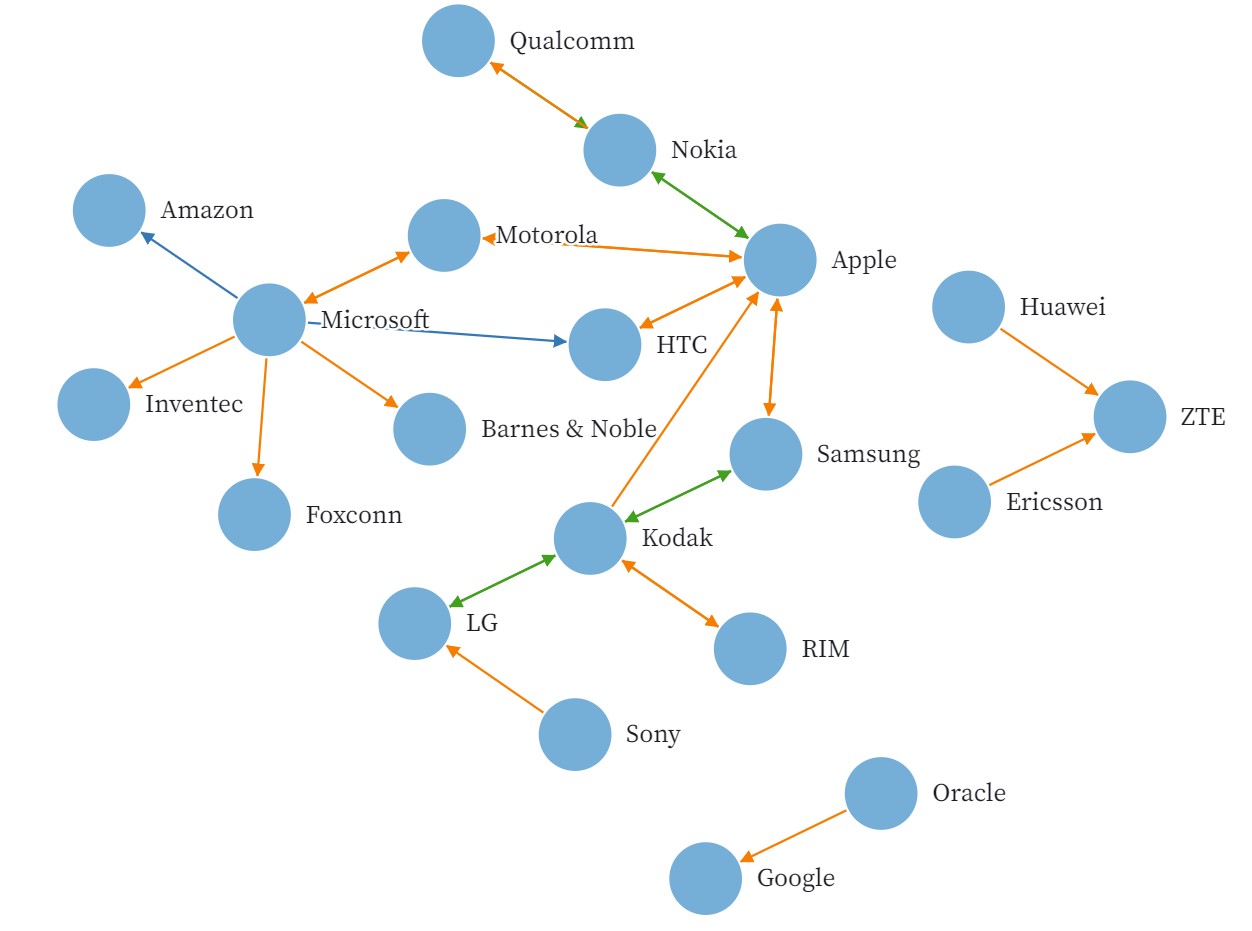

I hypothesized that the more times students’ documentation websites were referenced by their peers in a subject area, the more expertise they possessed. As a test, I ran a network analysis of the 13 students in the Charlotte Fab community, quantifying the number of times each student’s documentation website was referenced by their peers. The analysis yielded promising results: some students had significantly more references than the rest of the peer group, and student were frequently linking each other's websites.

Read more about my initial prototype here: 1st iteration and 2nd iteration.

Pitch

I showed the results to my local lab leader, Mr. Dubick, who suggested that I talk to Professor Gershenfeld and propose analyzing the global Fab community network to identify experts by topic. Professor Gershenfeld believed the network analysis would be incredibly valuable and connected me with a mentor in Spain, Francisco Sanchez Arroyo, who advised me on the best tools and structure to use.

Project Execution

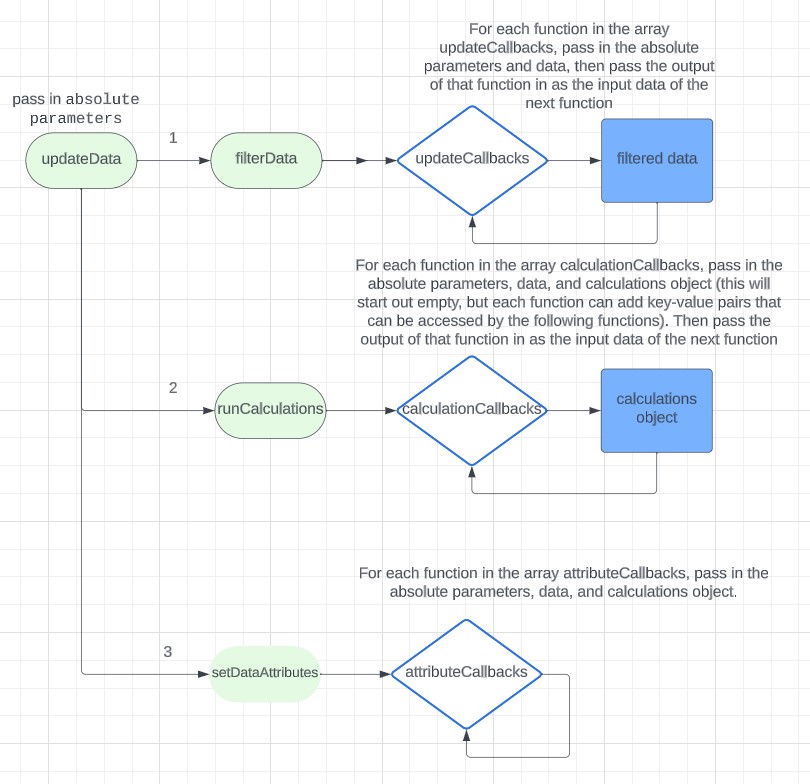

I executed the project in three distinct phases: I wrote a script to collect the data, designed a text-classification AI model to sort links by subject area, and utilized D3JS to present the data in an interactive visualization.

Step 1: Data Collection

For the first step, I wrote a Python script to scrape all Fab Academy students’ documentation website GitLab repos over the past six years using the Python-GitLab API and scan for URL references to other student websites using RegEx. I also stored 1,000 characters before and after to use for classification and used Pandas to create a reference matrix that structured the data for analysis. My program yielded a database of ~29,000 references.

Step 2: AI, Sorting Data & Analysis

After the data were collected, I knew I had a significant challenge. For the network analysis to be useful, I needed to calculate the number of times a student’s documentation was referenced for a specific subject area. I had collected tens of thousands of pages of documentation text that used vastly different naming conventions for each subject area (for example, "3D Scanning & Printing" vs "3d.printing.and.scanning"), and much of the text understandably had spelling errors given that many Fab Academy students do not speak English as a first language. To overcome the challenge of categorizing references by subject area with no consistent naming convention, I created a text-classification neural network. Using a list of keywords that were often associated with a Fab Academy subject-area, for example "PLA filament" for "3D Printing," I was able to classify ~13,000 of the ~29,000 references based on the surrounding text. Find a JSON file of the keywords I used for each subject area below.

{

"Prefab": [],

"Computer-Aided Design": [

"Computer-Aided Design",

"freecad"

],

"Computer-Controlled Cutting": [

"Laser Cut",

"CCC week",

"Computer-Controlled Cutting"

],

"Embedded Programing": [

"Embedded Programming",

"MicroPython",

"C++",

"pythoncpp",

"ino",

"Arduino IDE",

"programming week"

],

"3D Scanning and Printing": [

"3D Printing",

"3D Scanning",

"TPU",

"PETG",

"filament",

"Prusa",

"3d printing week",

"polyCAM",

"3D Scanning and Printing",

"3d printers",

"3d printer"

],

"Electronics Design": [

"EagleCAD",

"Eagle",

"KiCAD",

"Routing",

"Auto-Route",

"Trace",

"Footprint",

"electronic design",

"Electronics Design"

],

"Computer-Controlled Machining": [

"CNC",

"Shopbot",

"Computer-Controlled Machining"

],

"Electronics Production": [

"Mill",

"Milling",

"copper",

"electronic production",

"Electronics Production"

],

"Mechanical Design, Machine Design": [

"Machine week",

"actuation and automation",

"Mechanical Design, Machine Design"

],

"Input Devices": [

"Input Devices",

"Input Device",

"Inputs Devices",

"Electronic input",

"sensor",

"Input Devices"

],

"Moulding and Casting": [

"Part A",

"Part B",

"pot time",

"pottime",

"molding",

"moulding",

"casting",

"cast",

"Moulding and Casting"

],

"Output Devices": [

"Output Device",

"Outputs Devices",

"Outputs Device",

"Servo",

"motor",

"Output Devices"

],

"Embedded Networking and Communications": [

"SPI",

"UART",

"I2C",

"RX",

"TX",

"SCL",

"networking week",

"networking",

"network",

"networking and communications",

"Embedded Networking and Communications"

],

"Interface and Application Programming": [

"Interfacing Week",

"interface week",

"Interface and Application Programming"

],

"Wildcard Week": [

"Wildcard Week"

],

"Applications and Implications": [

"Applications and Implications",

"Bill-of-Materials",

"Bill of materials"

],

"Invention, Intellectual Property and Business Models": [

"Patent",

"copyright",

"trademark",

"Invention, Intellectual Property and Business Models"

],

"Final Project": [

"Final Project"

],

"Other": []

}

To classify the remaining ~19,500 references, I trained my own text-classification neural network off of the 1,000 characters before and after each of the ~13,000 already classified references using Python and PyTorch. I designed the neural network with 5,000 input nodes, a hidden layer of 200 nodes, and 18 output nodes (one for each Fab Academy subject area). To format the data for the neural network, I used the Sci-Kit Learn CountVectorizer to tokenize the text surrounding each URL, and the resulting matrix was converted to a NumPy array and then to a PyTorch tensor. To train the neural network, I implemented Cross-Entropy Loss as the loss function to measure the model's performance in predicting the correct subject-area, and I employed the Adam optimizer to adjust the model's weights. After hyperparameter tuning using a grid-search algorithm, the model achieved its best accuracy of 86.3% using a learning rate of 0.01 and dropout rate of 0.1. You can see the gridsearch algorithm below.

# Define grid of hyperparameters

learning_rates = [0.1, 0.01, 0.001]

dropout_rates = [0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

# Perform the grid search

best_accuracy = 0.0

best_lr = None

best_dropout_rate = None

best_model = None

for lr in learning_rates:

for dropout_rate in dropout_rates:

# train_model is defined earlier

accuracy, model = train_model(lr, dropout_rate)

print(f'Learning rate: {lr}, Dropout rate: {dropout_rate}, Accuracy: {accuracy}')

if accuracy > best_accuracy:

best_accuracy = accuracy

best_lr = lr

best_dropout_rate = dropout_rate

best_model = model

The model successfully classified the remaining ~19,500 references.

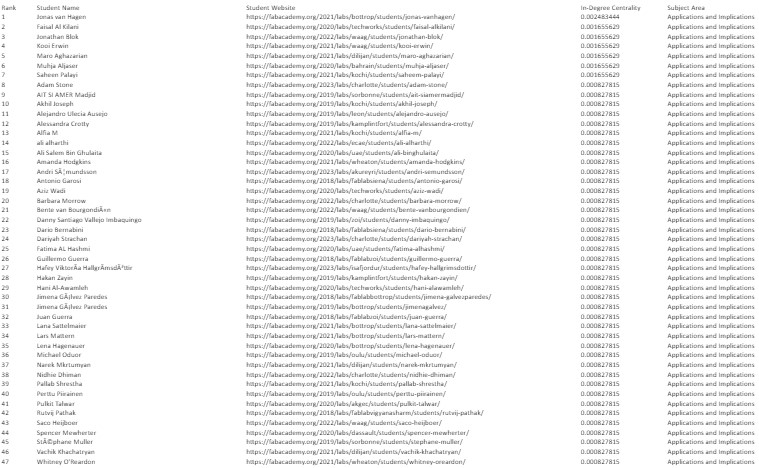

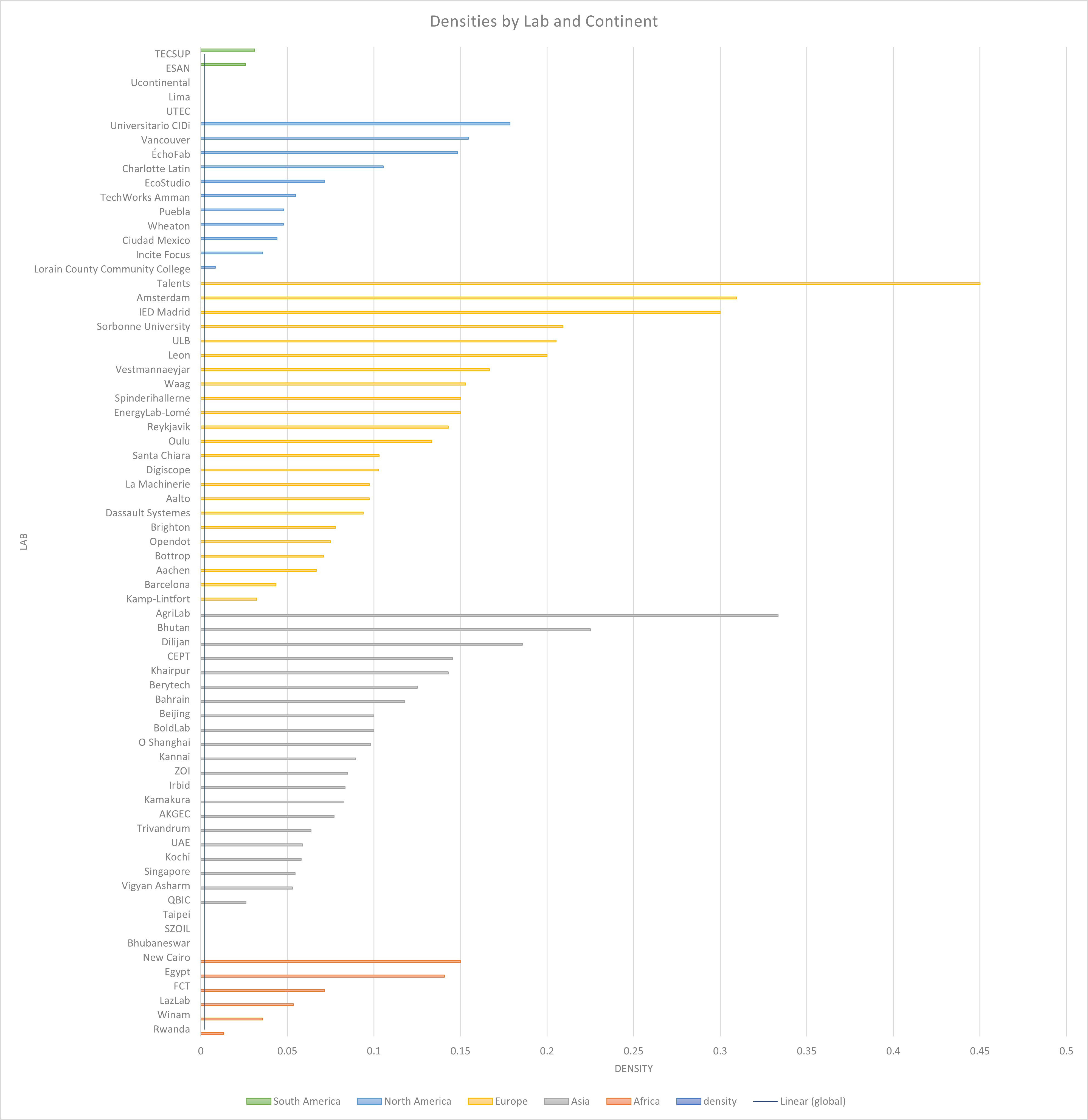

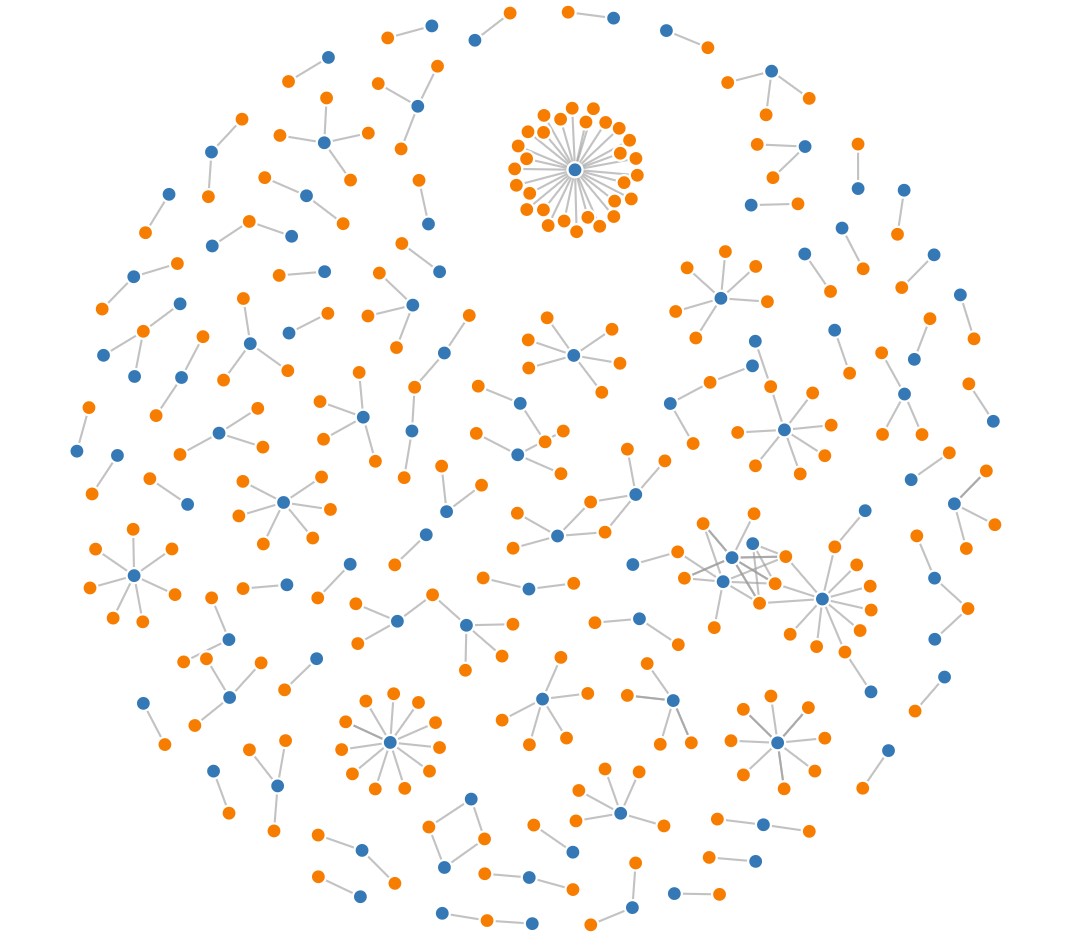

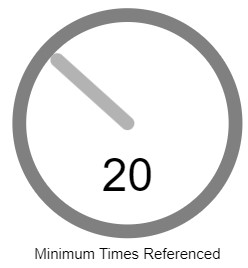

After the data were collected, I ran a network density analysis for the global community, as well as each individual lab that had more than five members. The global network density was ~0.0024, while ~91.3% of the individual labs had a higher density than this, ranging from approximately 0.03 to 0.45 (with the exception of the Rwanda network density of ~0.013), supporting my hypothesis that students frequently connected with experts in their own lab, but not in the global community. Next, for the network analysis to be useful, I calculated each student's in-degree centrality by subject area. Read more about this process below.

Step 3: Data Visualization

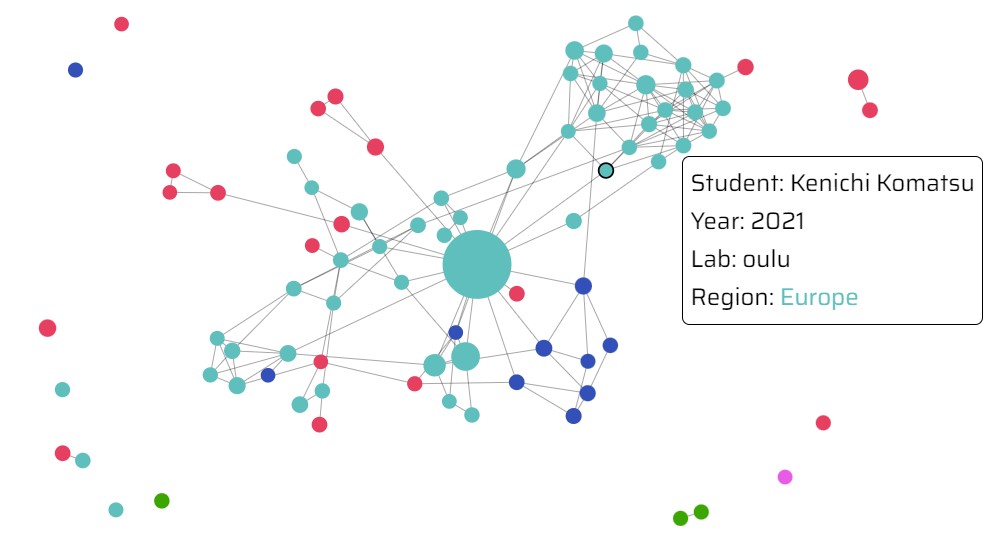

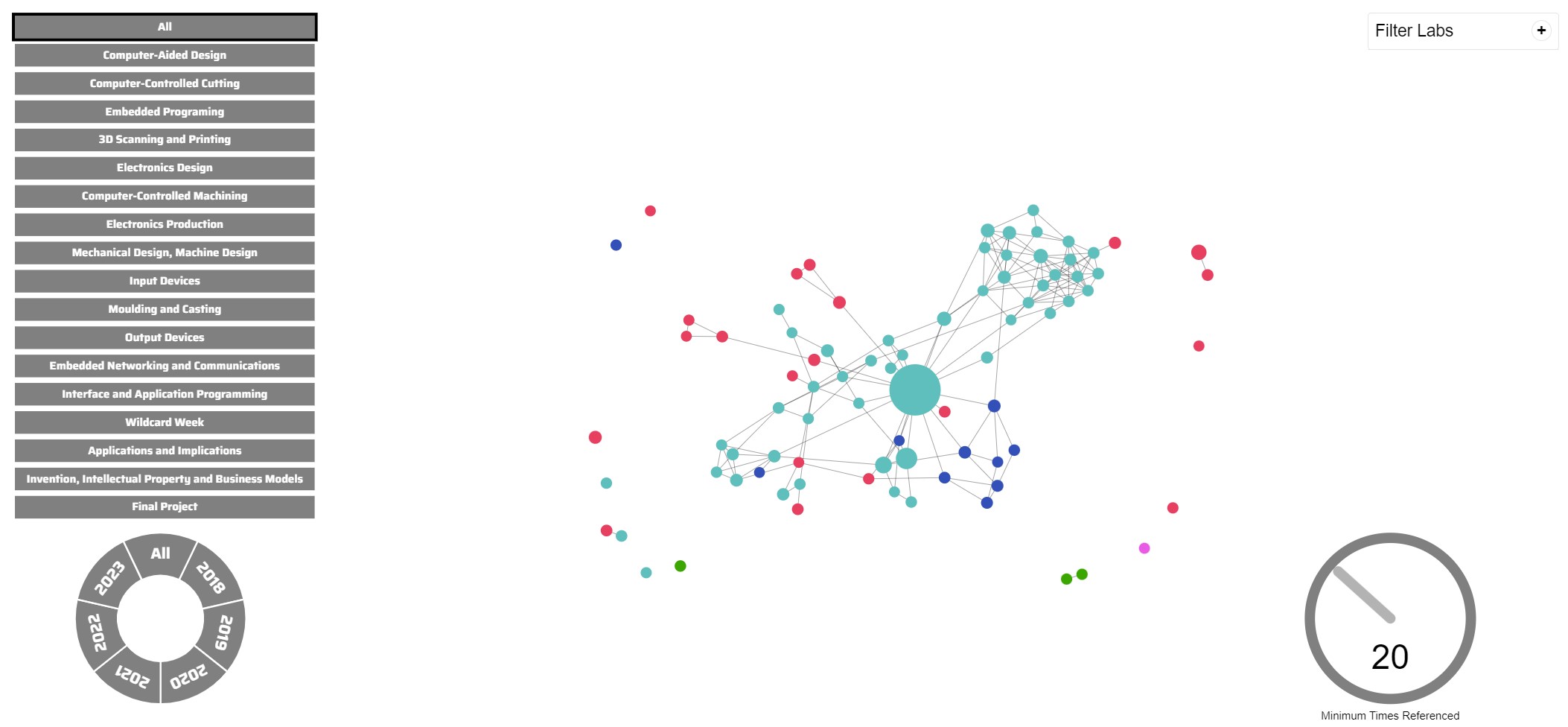

Lastly, I wanted to provide the global Fab community with an easy-to-use navigation tool to locate expert documentation by subject area, as identified in my network analysis. I consulted with Mr. Arroyo and decided to create an interactive force simulation graph using D3JS. In the graph, each node represents a student and each edge represents references between students.

The more times a student’s documentation website has been referenced, the larger the student’s node, corresponding to more expertise. Other data, such as a student’s Fab Lab location and graduation year, were encoded via other visual mediums. I added interactive features, such as filters for subject area, geography, and graduation year, to help students quickly identify experts based on their needs.

Because the first version of the Expert Network Map had a large number of data points reloading in real-time, the tool was too slow to be usable. I made two modifications to optimize performance. First, I used D3JS’ enter-update-exit protocol to change the force simulation graph without reloading all of the data. Next, I collapsed multiple references between the same students into a single edge with greater force. The Expert Network Map is live and will be used by Fab Academy 2024 students.

Documentation

Step 1: Data Collection

Download the Python code for Step 1 here!

Scraping Overview and Pagination Concerns

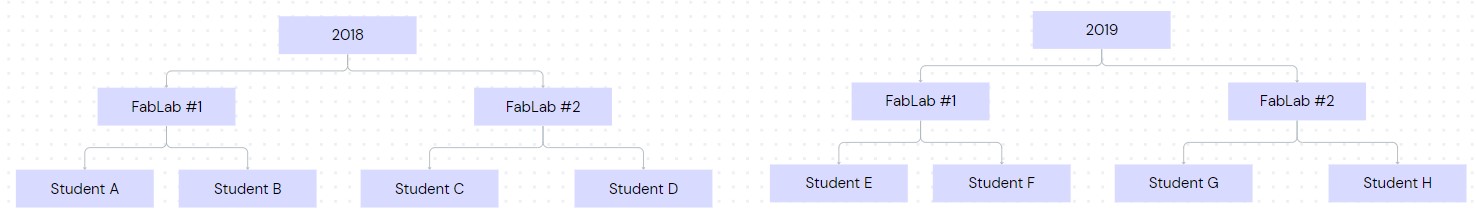

The first step of the project is to collect data on all of the references between Fab Academy students' documentation from 2018-2023. Since every student's documentation website is hosted from a GitLab repo, I wrote a Python script that uses the Python-GitLab API to scan each student's repo using a RegEx.

In my first iteration of the script, I did not realize that the GitLab API paginates to the first twenty projects or repos by default, and the RegEx failed to include links from students between years (for example, a student from 2023 referencing a student from 2018). I realized this error after I had completed part of Step 2 and successfully trained and hyperparameter tuned a neural network to categorize text by subject-area. So, I could have included more training data, however I decided not to re-run the training process since the model had a lot of data to train on even with the pagination and missing references (~13,000 blocks of 2,000 characters of text, approximately 18,000 pages) and achieved a satisfactory accuracy of 86.3%. Most importantly, these students' data and the extra references were included in the network analysis in Step 2, as well as in the data visualization in Step 3. I will show the code with the errors first (the changes were minimal between the versions), then include the altered version in Step 2.

Data Structure

Data was stored in the below structure before being converted to a Pandas dataframe. There was an array of tuples that contained the GitLab ID of a student's lab's subgroup and a dictionary. The dictionary had one key, the name of the student, and had another dictionary as the value. The inner dictionary contained key-value pairs of how many times the student referenced other students' websites.

Subject-Area Keywords

Additionally, I wrote a list of keywords for each subject-area. If the 2,000 characters surrounding a link contained one of the keywords, a new object was stored in a jsonl file containing the text and categorized subject-area. Additionally, self-links were included in the training data. Here's a sample from the collected data.

{"text": "018/labs/fablabamsterdam/students/klein-xavier/pages/week8.html\">check here to know how i did it</a>), i set the clock to <i>internal 8 mhz</i>.\n\ni tried the code <a href=\"https://github.com/maltesemants1/charlieplexing-the-arduino/blob/master/charlieplexing%20sketch\" target=\"_blank\">from the tutorial i followed</a>.<br>\nnow, if you look at images of the board above, something is missing. the regulator. if you're already noticed it you win this \"very good eyes\" cup:<br><br>\n<img src=\"week11/img/cup.gif\" alt=\"\"><br><br>\n\ni try first with the code above, i change the pins, looking to this type of datasheet: <br><br>\n<img src=\"http://fabacademy.org/2018/labs/fablabtrivandrum/students/aby-michael/week9/image/attinypinouts14.jpg\" alt=\"\"><br><br>\n\n i've upload the code and nothing happened. the regulator started to fried!<br>\n it fried because i put it there to regulate a 9v from a battery to 5v. but there was no battery when i tested the code (using the programmer as power supply) and the regulator didn't enjoy it.<br>\n so for the next \"experiments\" i remove it and used only the programmer as power supply.<br><br>\n\n i manage to make charlieplexing working with the good pins, i've made some mods to the code in order to have a back and forth movement (you check this code <a href=\"http://archive.fabacademy.org/2018/labs/fablabamsterdam/students/klein-xavier/pages/week11/file/charlieplexing.ino\">here</a>.):<br><br>\n <img src=\"week11/img/pins.png\" alt=\"\"><br><br>\n here's the beast:<br><br>\n<iframe src=\"https://giphy.com/embed/1lxry3fbt0naiel2gn\" width=\"480\" height=\"266\" frameborder=\"0\" class=\"giphy-embed\" allowfullscreen></iframe><br><br>\n\n<b>the code:</b><br><br>\n\nt\n\n/*<br>\n * charlieplexing code for this board:<br>\n\n * http://archive.fabacademy.org/2018/labs/fablabamsterdam/students/klein-xavier/pages/week11/file/ledboard.zip <br>\n * wtfpl xavier klein <br>\n */ <br>\n\n<br>\n<b>here i have 3 variables related to the 3 pins i dedicated to for each row.</b><br>\n//setting the pin for each row.<br>\nconst int led_1 = 0; //led", "label": "Embedded Programing", "metadata": {"from": "https://fabacademy.org/2018/labs/fablabamsterdam/students/klein-xavier/", "to": "https://fabacademy.org/2018/labs/fablabtrivandrum/students/aby-michael/"}}

Below is a list of the subject-areas and the corresponding keywords (later the subject-areas "Prefab" and "Other" were removed):

TOPICS = [

"Prefab",

"Computer-Aided Design",

"Computer-Controlled Cutting",

"Embedded Programing",

"3D Scanning and Printing",

"Electronics Design",

"Computer-Controlled Machining",

"Electronics Production",

"Mechanical Design, Machine Design",

"Input Devices",

"Moulding and Casting",

"Output Devices",

"Embedded Networking and Communications",

"Interface and Application Programming",

"Wildcard Week",

"Applications and Implications",

"Invention, Intellectual Property and Business Models",

"Final Project",

"Other"

]

TOPIC_SEARCH_TERMS = [

[],

["Computer-Aided Design", "freecad"],

["Laser Cut", "CCC week", "Computer-Controlled Cutting"],

["Embedded Programming", "MicroPython", "C\+\+", "python" "cpp", "ino", "Arduino IDE", "programming week"],

["3D Printing", "3D Scanning", "TPU", "PETG", "filament", "Prusa", "3d printing week", "polyCAM", "3D Scanning and Printing", "3d printers", "3d printer"], # not PLA because in too many other words

["EagleCAD", "Eagle", "KiCAD", "Routing", "Auto-Route", "Trace", "Footprint", "electronic design", "Electronics Design"],

["CNC", "Shopbot", "Computer-Controlled Machining"],

["Mill", "Milling", "copper", "electronic production", "Electronics Production"],

["Machine week", "actuation and automation", "Mechanical Design, Machine Design"],

["Input Devices", "Input Device", "Inputs Devices", "Electronic input", "sensor", "Input Devices"],

["Part A", "Part B", "pot time", "pottime", "molding", "moulding", "casting", "cast", "Moulding and Casting"],

["Output Device", "Outputs Devices", "Outputs Device", "Servo", "motor", "Output Devices"],

["SPI", "UART", "I2C", "RX", "TX", "SCL", "networking week", "networking", "network", "networking and communications", "Embedded Networking and Communications"],

["Interfacing Week", "interface week", "Interface and Application Programming"],

["Wildcard Week"],

["Applications and Implications", "Bill-of-Materials", "Bill of materials"],

["Patent", "copyright", "trademark", "Invention, Intellectual Property and Business Models"],

["Final Project"],

[]

]

GitLab Structure

The Fab Academy student GitLab repos are structured as follows:

So it worked down the tree, starting with each year's subgroup and traversing down the tree breadth-first. Helper functions are included in the full main_collection.py below.

for year in range(2018, 2024):

print("Loading student names...")

all_student_names = get_all_people(year)

print(all_student_names)

print("Collecting student repo IDs...")

all_student_repo_ids = get_all_student_repo_ids(year, ALL_LAB_SUBGROUP_IDS[year], all_student_names)

print(all_student_repo_ids)

all_reference_dicts = [] # [(lab_id: id (int), {"Student Name": {"student-referenced": num_references (int), ...}}), ...]

for lab_number, id in all_student_repo_ids:

reference_dict_list = []

compiled_reference_dict = get_all_reference_dicts(year, id)

print("Adding reference dictionary to database...")

all_reference_dicts.append((lab_number, {format_name(get_repo_name(id), year): compiled_reference_dict}))

print(f"All reference dictionaries so far... {all_reference_dicts}")

reference_dicts_across_years.append(all_reference_dicts)

matrix = format_data_to_matrix(reference_dicts_across_years)

Since all links to another's website in documentation should be found in either an HTML, TXT, or Markdown file, I only scanned for references in these file types. I also provided the Subgroup IDs of the overal Fab Academy GitLab Projects for the years 2018-2023.

VALID_EXTENSOINS = ["html", "txt", "md"]

ALL_LAB_SUBGROUP_IDS = {

2023: 8145,

2022: 3632,

2021: 2917,

2020: 2140,

2019: 1619,

2018: 852

}

In order to decrease run-time when running the script multiple times, certain information, such as the list of every student's names from the fabacademy.org website, are pickled and stored locally.

Full Code

Here is the entire main_collection.py file with helper functions.

main_collection.py

import requests, base64, urllib.parse, gitlab, re, pickle, os, time, json

from bs4 import BeautifulSoup

import pandas as pd

from urllib.parse import urljoin

GL = gitlab.Gitlab('https://gitlab.fabcloud.org', api_version=4)

VALID_EXTENSOINS = ["html", "txt", "md"]

ALL_LAB_SUBGROUP_IDS = {

2023: 8145,

2022: 3632,

2021: 2917,

2020: 2140,

2019: 1619,

2018: 852

}

CHARACTERS_EACH_DIRECTION_TOPIC_DETECTION = 1000

TOPICS = [

"Prefab",

"Computer-Aided Design",

"Computer-Controlled Cutting",

"Embedded Programing",

"3D Scanning and Printing",

"Electronics Design",

"Computer-Controlled Machining",

"Electronics Production",

"Mechanical Design, Machine Design",

"Input Devices",

"Moulding and Casting",

"Output Devices",

"Embedded Networking and Communications",

"Interface and Application Programming",

"Wildcard Week",

"Applications and Implications",

"Invention, Intellectual Property and Business Models",

"Final Project",

"Other"

]

TOPIC_SEARCH_TERMS = [

[],

["Computer-Aided Design", "freecad"],

["Laser Cut", "CCC week", "Computer-Controlled Cutting"],

["Embedded Programming", "MicroPython", "C\+\+", "python" "cpp", "ino", "Arduino IDE", "programming week"],

["3D Printing", "3D Scanning", "TPU", "PETG", "filament", "Prusa", "3d printing week", "polyCAM", "3D Scanning and Printing", "3d printers", "3d printer"], # not PLA because in too many other words

["EagleCAD", "Eagle", "KiCAD", "Routing", "Auto-Route", "Trace", "Footprint", "electronic design", "Electronics Design"],

["CNC", "Shopbot", "Computer-Controlled Machining"],

["Mill", "Milling", "copper", "electronic production", "Electronics Production"],

["Machine week", "actuation and automation", "Mechanical Design, Machine Design"],

["Input Devices", "Input Device", "Inputs Devices", "Electronic input", "sensor", "Input Devices"],

["Part A", "Part B", "pot time", "pottime", "molding", "moulding", "casting", "cast", "Moulding and Casting"],

["Output Device", "Outputs Devices", "Outputs Device", "Servo", "motor", "Output Devices"],

["SPI", "UART", "I2C", "RX", "TX", "SCL", "networking week", "networking", "network", "networking and communications", "Embedded Networking and Communications"],

["Interfacing Week", "interface week", "Interface and Application Programming"],

["Wildcard Week"],

["Applications and Implications", "Bill-of-Materials", "Bill of materials"],

["Patent", "copyright", "trademark", "Invention, Intellectual Property and Business Models"],

["Final Project"],

[]

]

# return file contents from GitLab repo

def get_file_content(file_path, project_id, default_branch_name):

print(f"Getting file content: {file_path}, {project_id}")

safe_url = f"https://gitlab.fabcloud.org/api/v4/projects/{project_id}/repository/files/{urllib.parse.quote(file_path, safe='')}?ref={default_branch_name}"

print(safe_url)

response = requests.get(safe_url).json()

if 'message' in response:

if '404' in response['message']:

print(f"404 ERROR from {safe_url}")

return

encrypted_blob = response['content']

decrypted_text = base64.b64decode(encrypted_blob)

return decrypted_text

# get name of GitLab repo

def get_repo_name(project_id):

safe_url = f"https://gitlab.fabcloud.org/api/v4/projects/{project_id}"

print(safe_url)

response = requests.get(safe_url).json()

web_url = response['web_url']

if 'message' in response:

if '404' in response['message']:

print(f"404 ERROR from {safe_url}")

return

return response['name'], gitlab_url_to_site_url(f"{web_url}/") # / to make it consistent with the name lists with urljoin and the a's hrefs

# get a list of the subgroups of a GitLab project

def get_file_repo_list(id):

all_file_paths = []

project = GL.projects.get(id)

all_directories = project.repository_tree(recursive=True, all=True)

for item in all_directories:

path = item['path']

if path.split('.')[-1].lower().strip() in VALID_EXTENSOINS:

all_file_paths.append(path)

return all_file_paths

# get the IDs of the subgroups of a GitLab project

def get_subgroup_ids(group_id):

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/subgroups"

response = None

while response is None:

try:

response = requests.get(safe_url).json()

except Exception as e:

print(f"Server timeout- {e}")

time.sleep(10)

p_ids = []

for item in response:

try:

p_ids.append(item['id'])

except:

print("Error finding subgroups -- skipping")

return p_ids

# get the IDs of subgprojects of a GitLab project

def get_subproject_ids(group_id):

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/projects"

response = requests.get(safe_url).json()

p_ids = []

for item in response:

p_ids.append(item['id'])

return p_ids

def name_split_char(year):

return "." if year < 2021 else " "

# convert a URL to a GitLab repo to a URL to the hosted website

def gitlab_url_to_site_url(gitlab_url):

return f"https://fabacademy.org/{gitlab_url.split('https://gitlab.fabcloud.org/academany/fabacademy/')[-1]}"

# format student's name to create a unique identifier for each student

def format_name(name_url_tup, year, tup=True):

if tup:

name, web_url = name_url_tup

return f'{"-".join(name.lower().strip().split(name_split_char(year)))};{web_url}'

else:

print("Warning: name generated without URL")

name = name_url_tup

return "-".join(name.lower().strip().split(name_split_char(year)))

# remove all links to websites that are not students' repos

def filter_only_student_repos(all_student_repo_ids, all_student_names, year):

filtered_ids = []

all_student_urls = [name.split(";")[1].strip() for name in all_student_names]

for i, id_list in all_student_repo_ids:

for id in id_list:

_, web_url = get_repo_name(id)

if web_url.strip()[:-1] in all_student_urls or web_url.strip() in all_student_urls: # [:-1] to remove ending slash

filtered_ids.append((i, id))

return filtered_ids

# get the repo IDs of all fab Academy students

def get_all_student_repo_ids(year, year_subgroup_id, all_student_names):

if save_exists("student_repo_id_saves", year):

return load_obj("student_repo_id_saves", year)

all_student_repo_ids = []

all_lab_ids = get_subgroup_ids(year_subgroup_id)

for id in all_lab_ids:

for sub_id in get_subgroup_ids(id):

all_student_repo_ids.append((id, get_subproject_ids(sub_id))) # ((i, get_subproject_ids(sub_id)))

print(all_student_repo_ids)

to_return = filter_only_student_repos(all_student_repo_ids, all_student_names, year)

save_obj("student_repo_id_saves", to_return, year)

return to_return

def save_exists(folder_name, name):

return os.path.exists(f"{folder_name}/{name}.obj")

def load_obj(folder_name, name):

with open(f"{folder_name}/{name}.obj", "rb") as filehandler:

return pickle.load(filehandler)

def save_obj(folder_name, obj, name):

with open(f"{folder_name}/{name}.obj", 'wb') as filehandler:

pickle.dump(obj, filehandler)

# go to fabacademy.org website to find student roster and all students' names and links to their websites

def get_all_people(year):

if save_exists("people_saves", year):

return load_obj("people_saves", year)

base_url = f"https://fabacademy.org/{year}/people.html"

soup = BeautifulSoup(requests.get(base_url).content, 'html.parser')

if year > 2018:

lab_divs = soup.find_all("div", {"class": "lab"})

names = []

for lab_div in lab_divs:

lis = lab_div.find_all("li")

As = [li.find("a") for li in lis]

names += [f"{a.text.strip().lower().replace(' ', '-')};{urljoin(base_url, a['href'])}" for a in As]

else:

lis = soup.find_all("li")

names = [f"{li.find('a').text.strip().lower().replace(' ', '-')};{urljoin(base_url, li.find('a')['href'])}" for li in lis]

save_obj("people_saves", names, year)

return names

# scan a repo for references to another student's documentation

def get_references(content, year, from_url):

pattern = re.compile(f"\/{year}\/labs\/[^\/]+\/students\/(\w|-)+\/")

people_linked = {}

for match in pattern.finditer(str(content)):

full_url = f"https://fabacademy.org{match.group(0)}"

print(f"FULL URL {full_url}")

person = format_name((match.group(0).split("/")[-2], full_url), year)

link_label = None

topic_search_start_ind = match.start() - CHARACTERS_EACH_DIRECTION_TOPIC_DETECTION

if topic_search_start_ind < 0:

topic_search_start_ind = 0

topic_search_end_ind = match.end() + CHARACTERS_EACH_DIRECTION_TOPIC_DETECTION

if topic_search_end_ind > len(content):

topic_search_end_ind = len(content)

print(f"({match.start()}, {match.end()}) -> ({topic_search_start_ind}, {topic_search_end_ind})")

topic_text = content.decode()[topic_search_start_ind:topic_search_end_ind].lower()

print("TOPIC TEXT", topic_text)

for i in range(len(TOPICS)):

topic = TOPICS[i]

topic_search_terms = TOPIC_SEARCH_TERMS[i]

for item in topic_search_terms:

item_spaces = item.replace("-", " ").replace("/", " ").replace(",", " ").lower().strip()

if re.search(re.compile(item_spaces.replace(" ",".")), topic_text) or re.search(re.compile(item_spaces.replace(" ","-")), topic_text) or re.search(re.compile(item_spaces), topic_text) or re.search(re.compile(item_spaces.replace(" ","")), topic_text):

link_label = topic

if link_label is None:

continue

with open("NLP_data/train.jsonl", "a") as file:

file.write('{' + '"text": ' + json.dumps(topic_text) + ', "label": "' + link_label + '", "metadata": '+ '{' + '"from": "' + from_url + '", "to": "' + full_url + '"' + '}' + '}\n')

if person in people_linked:

if link_label in people_linked[person]:

people_linked[person][link_label] += 1

else:

people_linked[person] = {}

people_linked[person][link_label] = 1

return people_linked

# combine students' dictionaries of references to other's websites

def combine_reference_dicts(reference_dict_list):

combined_reference_dict = {}

for dict in reference_dict_list:

for key in dict:

if key in combined_reference_dict:

for topic_key in dict[key]:

if topic_key in combined_reference_dict[key]:

combined_reference_dict[key][topic_key] += dict[key][topic_key]

else:

combined_reference_dict[key][topic_key] = dict[key][topic_key]

else:

combined_reference_dict[key] = dict[key]

return combined_reference_dict

# convert data to Pandas crosstab matrix

def format_data_to_matrix(data):

students = []

for year_info in data: # [(lab_id: int, {"Student Name": {"student-referenced": num_references: int, ...}}), ...]

for student_info in year_info: # (lab_id: int, {"Student Name": {"student-referenced": num_references: int, ...}})

lab_id = student_info[0]

student_name = list(student_info[1].keys())[0]

reference_dict = student_info[1][list(student_info[1].keys())[0]]

students.append(student_name)

print("STUDENTS", students)

df = pd.crosstab(students, students)

df.rename_axis("Referencing Students", axis=0, inplace=True)

df.rename_axis("Referenced Students", axis=1, inplace=True)

df = pd.DataFrame(df, index=df.index, columns=pd.MultiIndex.from_product([df.columns, TOPICS]))

for student1 in students:

for student2 in students:

for topic_name in TOPICS:

df.at[student1, (student2, topic_name)] = (pd.NA if student1 == student2 else 0)

def assign_value(referencer_student, referenced_student, num_references, topic):

if referencer_student == referenced_student:

print(f"Ignoring self-referenced student {referencer_student}")

elif referenced_student not in students:

print("Referenced student isn't a student - URL matched naming convention so regex caught but wasn't checked against student list - skipping")

else:

df.loc[referencer_student, (referenced_student, topic)] = num_references

def get_value(referencer_student, referenced_student, topic):

return df.loc[referencer_student, (referenced_student, topic)]

for year_info in data:

for student_info in year_info:

student_name = list(student_info[1].keys())[0]

reference_dict = dict(student_info[1][student_name])

for referenced_student in reference_dict:

for reference_type in reference_dict[referenced_student]:

num_references = reference_dict[referenced_student][reference_type]

assign_value(student_name, referenced_student, num_references, reference_type)

df.to_csv("final_data.csv", index_label="Referencing Students|Referenced Students")

return df

# get all of the reference dictionaries of different students for a given year

def get_all_reference_dicts(year, id):

filename = f"{year}-{id}"

if save_exists("reference_dict_saves", filename):

print(f"Save exists! {load_obj('reference_dict_saves', filename)}")

return load_obj("reference_dict_saves", filename)

reference_dict_list = []

default_branch_name_response = requests.get(f"https://gitlab.fabcloud.org/api/v4/projects/{id}/repository/branches").json()

default_branch_name = None

for branch in default_branch_name_response:

if branch['default']:

default_branch_name = branch['name']

if default_branch_name is None:

print(f"Error: Default Branch Not Found (id: {id}) {default_branch_name_response} - leaving as None")

try:

for file in get_file_repo_list(id):

print(f"Checking {file}...")

reference_dict_list.append(get_references(get_file_content(file, id, default_branch_name), year, get_repo_name(id)[1]))

print("Generating compiled reference dictionary...")

compiled_reference_dict = combine_reference_dicts(reference_dict_list)

print(compiled_reference_dict)

save_obj("reference_dict_saves", compiled_reference_dict, filename)

print(f"SAVING to {filename}")

return compiled_reference_dict

except gitlab.exceptions.GitlabGetError as e:

return combine_reference_dicts(reference_dict_list)

if __name__ == "__main__":

reference_dicts_across_years = [] # [[(lab_id: id (int), {"Student Name": {"student-referenced": num_references: (int), ...}}), ...], ...]

for year in range(2018, 2024):

print("Loading student names...")

all_student_names = get_all_people(year)

print(all_student_names)

print("Collecting student repo IDs...")

all_student_repo_ids = get_all_student_repo_ids(year, ALL_LAB_SUBGROUP_IDS[year], all_student_names)

print(all_student_repo_ids)

all_reference_dicts = [] # [(lab_id: id (int), {"Student Name": {"student-referenced": num_references: 9d (int), ...}}), ...]

for lab_number, id in all_student_repo_ids:

reference_dict_list = []

compiled_reference_dict = get_all_reference_dicts(year, id)

print("Adding reference dictionary to database...")

all_reference_dicts.append((lab_number, {format_name(get_repo_name(id), year): compiled_reference_dict}))

print(f"All reference dictionaries so far... {all_reference_dicts}")

reference_dicts_across_years.append(all_reference_dicts)

matrix = format_data_to_matrix(reference_dicts_across_years)

Step 2: AI, Sorting Data & Analysis

Download the Python code and all other files for Step 2 here! (10.6 MB)

Download only the Python code for Step 2 here!

Training the Model

Before running the training, I had to format the trianing data from a jsonl to a csv file. So, I wrote jsonl2csv.py to convert the JSON objects into CSV format. I used a number to signify each subject-area (each will correspond to an output node in the neural network) and save the exported dataframe as NLP_data/train.csv.

jsonl2csv.py

import json, string

import pandas as pd

DATA_LABELS = {

"Prefab": 0,

"Computer-Aided Design": 1,

"Computer-Controlled Cutting": 2,

"Embedded Programing": 3,

"3D Scanning and Printing": 4,

"Electronics Design": 5,

"Computer-Controlled Machining": 6,

"Electronics Production": 7,

"Mechanical Design, Machine Design": 8,

"Input Devices": 9,

"Moulding and Casting": 10,

"Output Devices": 11,

"Embedded Networking and Communications": 12,

"Interface and Application Programming": 13,

"Wildcard Week": 14,

"Applications and Implications": 15,

"Invention, Intellectual Property and Business Models": 16,

"Final Project": 17,

"Other": 18

}

if __name__ == "__main__":

txts = []

labels = []

tos = []

froms = []

with open('NLP_data/train.jsonl', 'r') as jsonl:

for line in jsonl.readlines():

j = json.loads(line)

txts.append("".join([char for char in j['text'] if char in set(string.printable)])) # string.printable removes non-ASCII chars to avoid writing errors

labels.append(DATA_LABELS[j['label']])

tos.append(j['metadata']['to'])

froms.append(j['metadata']['from'])

data_dict = {

"Text": txts,

"Labels": labels,

"To": tos,

"From": froms

}

df = pd.DataFrame(data_dict)

df.to_csv("NLP_data/train.csv")

To train the model in train_nn.py, I used the Python machine-learning libraries PyTorch and Sci-Kit Learn. I'll break the code into sections then provide the entire file below.

First, I loaded the CSV with Pandas, stored the 2,000 blocks and their lables in two series, and used train_test_split from sklearn.model_selection to put 80% of the data into a training set and 20% into a testing set.

# Read the CSV file

df = pd.read_csv('NLP_data/train.csv')

# Columns are named 'Text' and 'Labels'

texts = df['Text']

labels = df['Labels']

# Split the dataset into training and test sets

X_train, X_test, y_train, y_test = train_test_split(texts, labels, test_size=0.2, random_state=42)

Then, using the CountVectorizer from sklearn.feature_extraction.text, I vectorized the training and test data. You can read about the CountVectorizer here.

# Convert the texts into vectors

vectorizer = CountVectorizer(max_features=5000) # limit to 5000 most frequent words/tokens

X_train = vectorizer.fit_transform(X_train)

X_test = vectorizer.transform(X_test)

I pickled and saved the vectorizer object.

Next I converted the train and test data to NumPy arrays then PyTorch tensors.

# Convert the vectors and labels to numpy arrays

X_train = X_train.toarray()

X_test = X_test.toarray()

y_train = y_train.to_numpy()

y_test = y_test.to_numpy()

X_train = torch.from_numpy(X_train).float()

X_test = torch.from_numpy(X_test).float()

y_train = torch.from_numpy(y_train).long()

y_test = torch.from_numpy(y_test).long()

Then I defined the TextClassifer neural network class. There are three layers: input, hidden, and output. Adjacent layers are fully connected (FC) (also called linear layers). The network also implements dropout and the ReLU activaition function before the hidden layer.

class TextClassifier(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim, dropout_prob=0.5):

super(TextClassifier, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.dropout = nn.Dropout(dropout_prob)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x):

x = torch.relu(self.fc1(x))

x = self.dropout(x)

x = self.fc2(x)

return x

Next, to begin finding the optimal hyperparameter combinations, I defined a list of values of learning rate and dropout rate to try, as well as a function to train the network with a specified value for each of these hyperparameters. The dimensions of the network are 5000x200x18. The training runs 100 epochs using the Adam optimizer and CrossEntropyLoss function. Then the model performance evaluated. The train_model function returns the model accuracy (measured on the test set data) and the model itself.

# define grid of hyperparameters

learning_rates = [0.1, 0.01, 0.001]

dropout_rates = [0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

# define a function for the training loop

def train_model(lr, dropout_rate):

model = TextClassifier(input_dim=X_train.shape[1], hidden_dim=200, output_dim=18, dropout_prob=dropout_rate)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=lr)

for epoch in range(100):

optimizer.zero_grad()

outputs = model(X_train)

loss = criterion(outputs, y_train)

loss.backward()

optimizer.step()

# Evaluate the model

with torch.no_grad():

outputs = model(X_test)

_, predicted = torch.max(outputs, 1)

correct = (predicted == y_test).sum().item()

accuracy = correct / y_test.size(0)

return accuracy, model

Then to implement the gridsearch, I loop through the combinations of learning rate and dropout rate, training and evaluating a neural network then storing its parameters and the model itself if the accuracy is better than all previous accuracies.

# Perform the grid search

best_accuracy = 0.0

best_lr = None

best_dropout_rate = None

best_model = None

for lr in learning_rates:

for dropout_rate in dropout_rates:

accuracy, model = train_model(lr, dropout_rate)

print(f'Learning rate: {lr}, Dropout rate: {dropout_rate}, Accuracy: {accuracy}')

if accuracy > best_accuracy:

best_accuracy = accuracy

best_lr = lr

best_dropout_rate = dropout_rate

best_model = model

Finally, the results of the best model are printed, the best model is saved in the best_model.pt file, and the best parameters are pickled and saved in best_params.pickle.

print(f'Best learning rate: {best_lr}, Best dropout rate: {best_dropout_rate}, Best accuracy: {best_accuracy}')

# Save the best model to a file

torch.save(best_model.state_dict(), 'best_model.pt')

# Also save the parameters in a dictionary

best_params = {"learning_rate": best_lr, "dropout_rate": best_dropout_rate, "accuracy": best_accuracy}

with open('best_params.pickle', 'wb') as handle:

pickle.dump(best_params, handle, protocol=pickle.HIGHEST_PROTOCOL)

All together, here is train_nn.py.

train_nn.py

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer

import torch

import torch.nn as nn

import torch.optim as optim

from sklearn.metrics import accuracy_score

import pickle

# Read the CSV file

df = pd.read_csv('NLP_data/train.csv')

# Columns are named 'Text' and 'Labels'

texts = df['Text']

labels = df['Labels']

# Split the dataset into training and test sets

X_train, X_test, y_train, y_test = train_test_split(texts, labels, test_size=0.2, random_state=42)

# Convert the texts into vectors

vectorizer = CountVectorizer(max_features=5000) # limit to 5000 most frequent words/tokens

X_train = vectorizer.fit_transform(X_train)

X_test = vectorizer.transform(X_test)

# Save the vectorizer

pickle.dump(vectorizer, open("vectorizer.pickle", "wb"))

# Convert the vectors and labels to numpy arrays

X_train = X_train.toarray()

X_test = X_test.toarray()

y_train = y_train.to_numpy()

y_test = y_test.to_numpy()

X_train = torch.from_numpy(X_train).float()

X_test = torch.from_numpy(X_test).float()

y_train = torch.from_numpy(y_train).long()

y_test = torch.from_numpy(y_test).long()

class TextClassifier(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim, dropout_prob=0.5):

super(TextClassifier, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.dropout = nn.Dropout(dropout_prob)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x):

x = torch.relu(self.fc1(x))

x = self.dropout(x)

x = self.fc2(x)

return x

# define grid of hyperparameters

learning_rates = [0.1, 0.01, 0.001]

dropout_rates = [0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

# define a function for the training loop

def train_model(lr, dropout_rate):

model = TextClassifier(input_dim=X_train.shape[1], hidden_dim=200, output_dim=18, dropout_prob=dropout_rate)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=lr)

for epoch in range(100):

optimizer.zero_grad()

outputs = model(X_train)

loss = criterion(outputs, y_train)

loss.backward()

optimizer.step()

# Evaluate the model

with torch.no_grad():

outputs = model(X_test)

_, predicted = torch.max(outputs, 1)

correct = (predicted == y_test).sum().item()

accuracy = correct / y_test.size(0)

return accuracy, model

# Perform the grid search

best_accuracy = 0.0

best_lr = None

best_dropout_rate = None

best_model = None

for lr in learning_rates:

for dropout_rate in dropout_rates:

accuracy, model = train_model(lr, dropout_rate)

print(f'Learning rate: {lr}, Dropout rate: {dropout_rate}, Accuracy: {accuracy}')

if accuracy > best_accuracy:

best_accuracy = accuracy

best_lr = lr

best_dropout_rate = dropout_rate

best_model = model

print(f'Best learning rate: {best_lr}, Best dropout rate: {best_dropout_rate}, Best accuracy: {best_accuracy}')

# Save the best model to a file

torch.save(best_model.state_dict(), 'best_model.pt')

# Also save the parameters in a dictionary

best_params = {"learning_rate": best_lr, "dropout_rate": best_dropout_rate, "accuracy": best_accuracy}

with open('best_params.pickle', 'wb') as handle:

pickle.dump(best_params, handle, protocol=pickle.HIGHEST_PROTOCOL)

To load the model, I wrote load_model_and_classify.py. The script defines classes for importing the pickled neural network and using it to classify references.

load_model_and_classify.py

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer

import torch

import torch.nn as nn

import pickle

class TextClassifier(nn.Module):

def __init__(self, input_dim, hidden_dim, output_dim, dropout_prob=0.5):

super(TextClassifier, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.dropout = nn.Dropout(dropout_prob)

self.fc2 = nn.Linear(hidden_dim, output_dim)

def forward(self, x):

x = torch.relu(self.fc1(x))

x = self.dropout(x)

x = self.fc2(x)

return x

class Classifier(object):

def __init__(self):

# Load the saved parameters

with open('best_params.pickle', 'rb') as handle:

self.best_params = pickle.load(handle)

# Load the vectorizer

self.vectorizer = pickle.load(open("vectorizer.pickle", "rb"))

self.input_dim = len(self.vectorizer.get_feature_names_out())

# Initialize and load the saved model

self.model = TextClassifier(input_dim=self.input_dim, hidden_dim=200, output_dim=18, dropout_prob=self.best_params['dropout_rate'])

self.model.load_state_dict(torch.load('best_model.pt'))

def ClassifyDF(self, df):

# Let's assume your new data only has a 'Text' column

texts = df['Text']

# Convert the texts into vectors

X = self.vectorizer.transform(texts)

# Convert the vectors to PyTorch tensors

X = torch.from_numpy(X.toarray()).float()

# Predict labels for the new data

with torch.no_grad():

outputs = self.model(X)

_, predicted = torch.max(outputs, 1)

# Add the predicted labels to the DataFrame

df['Predicted Labels'] = predicted.numpy()

return df

def classifyItem(self, content):

texts = [content]

# Convert the texts into vectors

X = self.vectorizer.transform(texts)

# Convert the vectors to PyTorch tensors

X = torch.from_numpy(X.toarray()).float()

# Predict labels for the new data

with torch.no_grad():

outputs = self.model(X)

_, predicted = torch.max(outputs, 1)

return predicted.numpy()[0]

Classifying References

Now that I have a neural network to classify references between students, I revised main_collection.py to main.py, implementing topic classification. I'll first point out the significant changes then display the entire file.

First, I fixed the pagination bug from Step 1 in three places:

get_file_repo_listfunction

# original

all_directories = project.repository_tree(recursive=True, all=True)

# fixed

all_directories = project.repository_tree(recursive=True, all=True, per_page=200)

get_subgroup_idsfunction

# original

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/subgroups"

# fixed

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/subgroups?per_page=999999"

get_subproject_idsfunction

# original

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/projects"

# fixed

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/projects?per_page=99999"

I also fixed the bug where only references between students of the same years are counted.

get_referencesfunction

# original

pattern = re.compile(f"\/{year}\/labs\/[^\/]+\/students\/(\w|-)+\/")

# fixed

pattern = re.compile(f"\/20[0-9][0-9]\/labs\/[^\/]+\/students\/(\w|-)+\/")

Second, the script no longer stores references that have a subject-area keyword in a jsonl file, and it uses the neural network to classify all of the references that don't have keywords.

in get_references function

# original

if link_label is None:

continue

with open("NLP_data/train.jsonl", "a") as file:

file.write('{' + '"text": ' + json.dumps(topic_text) + ', "label": "' + link_label + '", "metadata": '+ '{' + '"from": "' + from_url + '", "to": "' + full_url + '"' + '}' + '}\n')

if person in people_linked:

if link_label in people_linked[person]:

people_linked[person][link_label] += 1

else:

people_linked[person] = {}

people_linked[person][link_label] = 1

# new

if link_label is None:

link_label = TOPICS[classifier.classifyItem(content)]

if person in people_linked:

if link_label in people_linked[person]:

people_linked[person][link_label] += 1

else:

people_linked[person] = {}

people_linked[person][link_label] = 1

Finally, the output matrix, final_data.csv, now sorts references by subject-area.

in format_data_to_matrix function

# original

print("STUDENTS", students)

df = pd.crosstab(students, students)

df.rename_axis("Referencing Students", axis=0, inplace=True)

df.rename_axis("Referenced Students", axis=1, inplace=True)

df = pd.DataFrame(df, index=df.index, columns=pd.MultiIndex.from_product([df.columns, TOPICS]))

for student1 in students:

for student2 in students:

for topic_name in TOPICS:

df.at[student1, (student2, topic_name)] = (pd.NA if student1 == student2 else 0)

def assign_value(referencer_student, referenced_student, num_references, topic):

if referencer_student == referenced_student:

print(f"Ignoring self-referenced student {referencer_student}")

elif referenced_student not in students:

print("Referenced student isn't a student - URL matched naming convention so regex caught but wasn't checked against student list - skipping")

else:

df.loc[referencer_student, (referenced_student, topic)] = num_references

def get_value(referencer_student, referenced_student, topic):

return df.loc[referencer_student, (referenced_student, topic)]

for year_info in data:

for student_info in year_info:

student_name = list(student_info[1].keys())[0]

reference_dict = dict(student_info[1][student_name])

for referenced_student in reference_dict:

for reference_type in reference_dict[referenced_student]:

num_references = reference_dict[referenced_student][reference_type]

assign_value(student_name, referenced_student, num_references, reference_type)

df.to_csv("final_data.csv", index_label="Referencing Students|Referenced Students")

return df

# new

students_links = [X.split(";")[1] for X in students]

link_student_dict = {}

for i in range(len(students)):

link_student_dict[students_links[i]] = students[i]

print("STUDENTS", students)

df = pd.crosstab(students, students)

df.rename_axis("Referencing Students", axis=0, inplace=True)

df.rename_axis("Referenced Students", axis=1, inplace=True)

df = pd.DataFrame(df, index=df.index, columns=pd.MultiIndex.from_product([df.columns, TOPICS]))

for student1 in students:

for student2 in students:

for topic_name in TOPICS:

df.at[student1, (student2, topic_name)] = (pd.NA if student1 == student2 else 0)

def assign_value(referencer_student, referenced_student, num_references, topic):

if referencer_student == referenced_student:

print(f"Ignoring self-referenced student {referencer_student}")

elif referenced_student not in students:

if referenced_student.split(";")[1] in students_links:

assign_value(referencer_student, link_student_dict[referenced_student.split(";")[1]], num_references, topic)

else:

print("Referenced student isn't a student - URL matched naming convention so regex caught but wasn't checked against student list - skipping")

else:

df.loc[referencer_student, (referenced_student, topic)] = num_references

def get_value(referencer_student, referenced_student, topic):

return df.loc[referencer_student, (referenced_student, topic)]

for year_info in data:

for student_info in year_info:

student_name = list(student_info[1].keys())[0]

reference_dict = dict(student_info[1][student_name])

for referenced_student in reference_dict:

for reference_type in reference_dict[referenced_student]:

num_references = reference_dict[referenced_student][reference_type]

assign_value(student_name, referenced_student, num_references, reference_type)

df.to_csv("final_data.csv", index_label="Referencing Students|Referenced Students")

return df

All together, here is main.py.

main.py

import requests, base64, urllib.parse, gitlab, re, pickle, os, time, json

from bs4 import BeautifulSoup

import pandas as pd

from urllib.parse import urljoin

from load_model_and_classify import Classifier

GL = gitlab.Gitlab('https://gitlab.fabcloud.org', api_version=4)

VALID_EXTENSOINS = ["html", "txt", "md"]

ALL_LAB_SUBGROUP_IDS = {

2023: 8145,

2022: 3632,

2021: 2917,

2020: 2140,

2019: 1619,

2018: 852

}

CHARACTERS_EACH_DIRECTION_TOPIC_DETECTION = 1000

TOPICS = [

"Prefab",

"Computer-Aided Design",

"Computer-Controlled Cutting",

"Embedded Programing",

"3D Scanning and Printing",

"Electronics Design",

"Computer-Controlled Machining",

"Electronics Production",

"Mechanical Design, Machine Design",

"Input Devices",

"Moulding and Casting",

"Output Devices",

"Embedded Networking and Communications",

"Interface and Application Programming",

"Wildcard Week",

"Applications and Implications",

"Invention, Intellectual Property and Business Models",

"Final Project",

"Other"

]

TOPIC_SEARCH_TERMS = [

[],

["Computer-Aided Design", "freecad"],

["Laser Cut", "CCC week", "Computer-Controlled Cutting"],

["Embedded Programming", "MicroPython", "C\+\+", "python" "cpp", "ino", "Arduino IDE", "programming week"],

["3D Printing", "3D Scanning", "TPU", "PETG", "filament", "Prusa", "3d printing week", "polyCAM", "3D Scanning and Printing", "3d printers", "3d printer"], # not PLA because in too many other words

["EagleCAD", "Eagle", "KiCAD", "Routing", "Auto-Route", "Trace", "Footprint", "electronic design", "Electronics Design"],

["CNC", "Shopbot", "Computer-Controlled Machining"],

["Mill", "Milling", "copper", "electronic production", "Electronics Production"],

["Machine week", "actuation and automation", "Mechanical Design, Machine Design"],

["Input Devices", "Input Device", "Inputs Devices", "Electronic input", "sensor", "Input Devices"],

["Part A", "Part B", "pot time", "pottime", "molding", "moulding", "casting", "cast", "Moulding and Casting"],

["Output Device", "Outputs Devices", "Outputs Device", "Servo", "motor", "Output Devices"],

["SPI", "UART", "I2C", "RX", "TX", "SCL", "networking week", "networking", "network", "networking and communications", "Embedded Networking and Communications"],

["Interfacing Week", "interface week", "Interface and Application Programming"],

["Wildcard Week"],

["Applications and Implications", "Bill-of-Materials", "Bill of materials"],

["Patent", "copyright", "trademark", "Invention, Intellectual Property and Business Models"],

["Final Project"],

[]

]

# returns file content from a repo

def get_file_content(file_path, project_id, default_branch_name):

print(f"Getting file content: {file_path}, {project_id}")

safe_url = f"https://gitlab.fabcloud.org/api/v4/projects/{project_id}/repository/files/{urllib.parse.quote(file_path, safe='')}?ref={default_branch_name}"

print(safe_url)

response = requests.get(safe_url).json()

if 'message' in response:

if '404' in response['message']:

print(f"404 ERROR from {safe_url}")

return

encrypted_blob = response['content']

decrypted_text = base64.b64decode(encrypted_blob)

return decrypted_text

# get name of GitLab repo

def get_repo_name(project_id):

if save_exists("repo_names", f"{project_id}"):

return load_obj("repo_names", f"{project_id}")

safe_url = f"https://gitlab.fabcloud.org/api/v4/projects/{project_id}"

print(safe_url)

response = requests.get(safe_url).json()

web_url = response['web_url']

if 'message' in response:

if '404' in response['message']:

print(f"404 ERROR from {safe_url}")

return

to_return = response['name'], gitlab_url_to_site_url(f"{web_url}/") # / to make it consistent with the name lists with urljoin and the a's hrefs

save_obj("repo_names", to_return, f"{project_id}")

return to_return

# get a list of the subgroups of a GitLab project

def get_file_repo_list(id):

all_file_paths = []

project = GL.projects.get(id)

all_directories = project.repository_tree(recursive=True, all=True, per_page=200) # pagination bug patched

for item in all_directories:

print(item)

path = item['path']

if path.split('.')[-1].lower().strip() in VALID_EXTENSOINS:

all_file_paths.append(path)

return all_file_paths

# get the IDs of the subgroups of a GitLab project

def get_subgroup_ids(group_id):

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/subgroups?per_page=999999" # pagination bug patched

response = None

while response is None:

try:

response = requests.get(safe_url).json()

except Exception as e:

print(f"Server timeout- {e}")

time.sleep(10)

p_ids = []

for item in response:

try:

p_ids.append(item['id'])

except:

print("Error finding subgroups -- skipping")

return p_ids

# get the IDs of subgprojects of a GitLab project

def get_subproject_ids(group_id):

safe_url = f"https://gitlab.fabcloud.org/api/v4/groups/{group_id}/projects?per_page=99999" # pagination bug patched

response = requests.get(safe_url).json()

p_ids = []

for item in response:

p_ids.append(item['id'])

return p_ids

def name_split_char(year):

return "." if year < 2021 else " "

# convert a URL to a GitLab repo to a URL to the hosted website

def gitlab_url_to_site_url(gitlab_url):

return f"https://fabacademy.org/{gitlab_url.split('https://gitlab.fabcloud.org/academany/fabacademy/')[-1]}"

# format student's name to create a unique identifier for each student

def format_name(name_url_tup, year, tup=True):

if tup:

name, web_url = name_url_tup

return f'{"-".join(name.lower().strip().split(name_split_char(year)))};{web_url}'

else:

print("Warning: name generated without URL")

name = name_url_tup

return "-".join(name.lower().strip().split(name_split_char(year)))

# remove all links to websites that are not students' repos

def filter_only_student_repos(all_student_repo_ids, all_student_names, year):

filtered_ids = []

all_student_urls = [name.split(";")[1].strip() for name in all_student_names]

for i, id_list in all_student_repo_ids:

for id in id_list:

_, web_url = get_repo_name(id)

if web_url.strip()[:-1] in all_student_urls or web_url.strip() in all_student_urls: # [:-1] to remove ending slash

filtered_ids.append((i, id))

return filtered_ids

# get the repo IDs of all fab Academy students

def get_all_student_repo_ids(year, year_subgroup_id, all_student_names):

if save_exists("student_repo_id_saves", year):

return load_obj("student_repo_id_saves", year)

all_student_repo_ids = []

all_lab_ids = get_subgroup_ids(year_subgroup_id)

for id in all_lab_ids:

for sub_id in get_subgroup_ids(id):

print(sub_id)

all_student_repo_ids.append((id, get_subproject_ids(sub_id))) # ((i, get_subproject_ids(sub_id)))

print("ALL STUDENT REPO IDs", all_student_repo_ids)

print(all_student_repo_ids)

to_return = filter_only_student_repos(all_student_repo_ids, all_student_names, year)

print("FILTERED", to_return)

save_obj("student_repo_id_saves", to_return, year)

return to_return

def save_exists(folder_name, name):

return os.path.exists(f"{folder_name}/{name}.obj")

def load_obj(folder_name, name):

with open(f"{folder_name}/{name}.obj", "rb") as filehandler:

return pickle.load(filehandler)

def save_obj(folder_name, obj, name):

with open(f"{folder_name}/{name}.obj", 'wb') as filehandler:

pickle.dump(obj, filehandler)

# go to fabacademy.org website to find student roster and all students' names and links to their websites

def get_all_people(year):

if save_exists("people_saves", year):

return load_obj("people_saves", year)

base_url = f"https://fabacademy.org/{year}/people.html"

soup = BeautifulSoup(requests.get(base_url).content, 'html.parser')

if year > 2018:

lab_divs = soup.find_all("div", {"class": "lab"})

names = []

for lab_div in lab_divs:

lis = lab_div.find_all("li")

As = [li.find("a") for li in lis]

names += [f"{a.text.strip().lower().replace(' ', '-')};{urljoin(base_url, a['href'])}" for a in As]

else:

lis = soup.find_all("li")

names = [f"{li.find('a').text.strip().lower().replace(' ', '-')};{urljoin(base_url, li.find('a')['href'])}" for li in lis]

save_obj("people_saves", names, year)

return names

# scan a repo for references to another student's documentation

def get_references(content, year, from_url):

pattern = re.compile(f"\/20[0-9][0-9]\/labs\/[^\/]+\/students\/(\w|-)+\/")

people_linked = {}

for match in pattern.finditer(str(content)):

full_url = f"https://fabacademy.org{match.group(0)}"

print(f"FULL URL {full_url}")

person = format_name((match.group(0).split("/")[-2], full_url), year)

link_label = None

topic_search_start_ind = match.start() - CHARACTERS_EACH_DIRECTION_TOPIC_DETECTION

if topic_search_start_ind < 0:

topic_search_start_ind = 0

topic_search_end_ind = match.end() + CHARACTERS_EACH_DIRECTION_TOPIC_DETECTION

if topic_search_end_ind > len(content):

topic_search_end_ind = len(content)

print(f"({match.start()}, {match.end()}) -> ({topic_search_start_ind}, {topic_search_end_ind})")

topic_text = content.decode()[topic_search_start_ind:topic_search_end_ind].lower()

print("TOPIC TEXT", topic_text)

for i in range(len(TOPICS)):

topic = TOPICS[i]

topic_search_terms = TOPIC_SEARCH_TERMS[i]

for item in topic_search_terms:

item_spaces = item.replace("-", " ").replace("/", " ").replace(",", " ").lower().strip()

if re.search(re.compile(item_spaces.replace(" ",".")), topic_text) or re.search(re.compile(item_spaces.replace(" ","-")), topic_text) or re.search(re.compile(item_spaces), topic_text) or re.search(re.compile(item_spaces.replace(" ","")), topic_text):

link_label = topic

if link_label is None:

link_label = TOPICS[classifier.classifyItem(content)]

if person in people_linked:

if link_label in people_linked[person]:

people_linked[person][link_label] += 1

else:

people_linked[person] = {}

people_linked[person][link_label] = 1

return people_linked

# combine students' dictionaries of references to other's websites

def combine_reference_dicts(reference_dict_list):

combined_reference_dict = {}

for dict in reference_dict_list:

for key in dict:

if key in combined_reference_dict:

for topic_key in dict[key]:

if topic_key in combined_reference_dict[key]:

combined_reference_dict[key][topic_key] += dict[key][topic_key]

else:

combined_reference_dict[key][topic_key] = dict[key][topic_key]

else:

combined_reference_dict[key] = dict[key]

return combined_reference_dict

# convert data to Pandas crosstab matrix, now including subject-area

def format_data_to_matrix(data):

students = []

for year_info in data: # [(lab_id: (int), {"Student Name": {"student-referenced": num_references (int), ...}}), ...]

for student_info in year_info: # (lab_id: (int), {"Student Name": {"student-referenced": num_references (int), ...}})

lab_id = student_info[0]

student_name = list(student_info[1].keys())[0]

reference_dict = student_info[1][list(student_info[1].keys())[0]]

students.append(student_name)

students_links = [X.split(";")[1] for X in students]

link_student_dict = {}

for i in range(len(students)):

link_student_dict[students_links[i]] = students[i]

print("STUDENTS", students)

df = pd.crosstab(students, students)

df.rename_axis("Referencing Students", axis=0, inplace=True)

df.rename_axis("Referenced Students", axis=1, inplace=True)

df = pd.DataFrame(df, index=df.index, columns=pd.MultiIndex.from_product([df.columns, TOPICS]))

for student1 in students:

for student2 in students:

for topic_name in TOPICS:

df.at[student1, (student2, topic_name)] = (pd.NA if student1 == student2 else 0)

def assign_value(referencer_student, referenced_student, num_references, topic):

if referencer_student == referenced_student:

print(f"Ignoring self-referenced student {referencer_student}")

elif referenced_student not in students:

if referenced_student.split(";")[1] in students_links:

assign_value(referencer_student, link_student_dict[referenced_student.split(";")[1]], num_references, topic)

else:

print("Referenced student isn't a student - URL matched naming convention so regex caught but wasn't checked against student list - skipping")

else:

df.loc[referencer_student, (referenced_student, topic)] = num_references

def get_value(referencer_student, referenced_student, topic):

return df.loc[referencer_student, (referenced_student, topic)]

for year_info in data:

for student_info in year_info:

student_name = list(student_info[1].keys())[0]

reference_dict = dict(student_info[1][student_name])

for referenced_student in reference_dict:

for reference_type in reference_dict[referenced_student]:

num_references = reference_dict[referenced_student][reference_type]

assign_value(student_name, referenced_student, num_references, reference_type)

df.to_csv("final_data.csv", index_label="Referencing Students|Referenced Students")

return df

# get all of the reference dictionaries of different students for a given year

def get_all_reference_dicts(year, id):

filename = f"{year}-{id}"

if save_exists("reference_dict_saves", filename):

print(f"Save exists! {load_obj('reference_dict_saves', filename)}")

return load_obj("reference_dict_saves", filename)

reference_dict_list = []

default_branch_name_response = requests.get(f"https://gitlab.fabcloud.org/api/v4/projects/{id}/repository/branches").json()

default_branch_name = None

for branch in default_branch_name_response:

if branch['default']:

default_branch_name = branch['name']

if default_branch_name is None:

print(f"Error: Default Branch Not Found (id: {id}) {default_branch_name_response} - leaving as None")

try:

for file in get_file_repo_list(id):

print(f"Checking {file}...")

reference_dict_list.append(get_references(get_file_content(file, id, default_branch_name), year, get_repo_name(id)[1]))

print("Generating compiled reference dictionary...")

compiled_reference_dict = combine_reference_dicts(reference_dict_list)

print(compiled_reference_dict)

save_obj("reference_dict_saves", compiled_reference_dict, filename)

print(f"SAVING to {filename}")

return compiled_reference_dict

except gitlab.exceptions.GitlabGetError as e:

print("Error, returning combined reference dicts:", e)

return combine_reference_dicts(reference_dict_list)

def get_people_soup(year):

filename = f"{year}-soup"

if save_exists("people_saves", filename):

return load_obj("people_saves", filename)

base_url = f"https://fabacademy.org/{year}/people.html"

soup = BeautifulSoup(requests.get(base_url).content, 'html.parser')

save_obj("people_saves", soup, filename)

return soup

def repo_name_to_student_name(name_and_web_url_tup):

name, web_url = name_and_web_url_tup

year = web_url.split("/")[3]

href = f'/{"/".join(web_url.split("/")[3:])}'

people_soup = get_people_soup(year)

As = people_soup.find_all('a', href=True)

a = [_ for _ in As if _['href'] == href or _['href'] == href[:-1]][0] # or to account for ending slash

name_final = a.text.strip()

return name_final, web_url

if __name__ == "__main__":

classifier = Classifier()

reference_dicts_across_years = [] # [[(lab_id: (int), {"Student Name": {"student-referenced": num_references (int), ...}}), ...], ...]

for year in range(2018, 2024):

print("Loading student names...")

all_student_names = get_all_people(year)

print(all_student_names)

print("Collecting student repo IDs...")

all_student_repo_ids = get_all_student_repo_ids(year, ALL_LAB_SUBGROUP_IDS[year], all_student_names)

print(all_student_repo_ids)

all_reference_dicts = [] # [(lab_id: (int), {"Student Name": {"student-referenced": num_references (int), ...}}), ...]

for lab_number, id in all_student_repo_ids:

"""temp_i += 1

if temp_i > 3:

break"""

reference_dict_list = []

compiled_reference_dict = get_all_reference_dicts(year, id)

print(compiled_reference_dict)

print("Adding reference dictionary to database...")

all_reference_dicts.append((lab_number, {format_name(get_repo_name(id), year): compiled_reference_dict}))

print(f"All reference dictionaries so far... {all_reference_dicts}")

reference_dicts_across_years.append(all_reference_dicts)

matrix = format_data_to_matrix(reference_dicts_across_years)

Network Analysis

Code

Download the network analysis code here!

density.py calculates the global network density.

density.py

import pandas as pd

import pickle, json

import networkx as nx

with open("final_data.json", "rb") as file:

final_data = json.load(file)

G = nx.DiGraph()

# Add nodes to the graph

for node in final_data["nodes"]:

G.add_node(node["id"])

# Add edges (links) to the graph

for link in final_data["links"]:

G.add_edge(link["source"], link["target"], weight=link["value"], topic=link["topic"])

# Calculate density

density = nx.density(G)

with open("density.obj","wb") as file:

pickle.dump(density, file)

print(density)

density_by_lab.py creates a data table of the network density of each lab.

density_by_lab.py

import pandas as pd

import pickle, json

import networkx as nx

import matplotlib.pyplot as plt

lab_names_urls = ['aachen', 'aalto', 'agrilab', 'akgec', 'akureyri', 'algarve', 'bahrain', 'bangalore', 'barcelona', 'benfica', 'berytech', 'bhubaneswar', 'bhutan', 'boldseoul', 'bottrop', 'brighton', 'cept', 'chaihuo', 'chandigarh', 'charlotte', 'cidi', 'cit', 'ciudadmexico', 'cpcc', 'crunchlab', 'dassault', 'deusto', 'dhahran', 'digiscope', 'dilijan', 'ecae', 'echofab', 'ecostudio', 'egypt', 'energylab', 'esan', 'esne', 'fablabaachen', 'fablabaalto', 'fablabakgec', 'fablabamsterdam', 'fablabat3flo', 'fablabbahrain', 'fablabbeijing', 'fablabberytech', 'fablabbottrop', 'fablabbrighton', 'fablabcept', 'fablabcharlottelatin', 'fablabdassault', 'fablabdigiscope', 'fablabechofab', 'fablabecostudio', 'fablabegypt', 'fablaberfindergarden', 'fablabesan', 'fablabfacens', 'fablabfct', 'fablabgearbox', 'fablabincitefocus', 'fablabirbid', 'fablabisafjorour', 'fablabkamakura', 'fablabkamplintfort', 'fablabkhairpur', 'fablabkochi', 'fablabkromlaboro', 'fablablccc', 'fablableon', 'fablabmadridceu', 'fablabmexico', 'fablabodessa', 'fablabopendot', 'fablaboshanghai', 'fablaboulu', 'fablabpuebla', 'fablabreykjavik', 'fablabrwanda', 'fablabsantiago', 'fablabseoul', 'fablabseoulinnovation', 'fablabsiena', 'fablabsocom', 'fablabsorbonne', 'fablabspinderihallerne', 'fablabszoil', 'fablabtechworks', 'fablabtecsup', 'fablabtembisa', 'fablabtrivandrum', 'fablabuae', 'fablabulb', 'fablabutec', 'fablabvigyanasharm', 'fablabwgtn', 'fablabyachay', 'fablabyucatan', 'fablabzoi', 'falabdeusto', 'falabvestmannaeyjar', 'farmlabalgarve', 'fct', 'formshop', 'hkispace', 'ied', 'incitefocus', 'ingegno', 'inphb', 'insper', 'ioannina', 'irbid', 'isafjordur', 'jubail', 'kamakura', 'kamplintfort', 'kannai', 'kaust', 'keolab', 'khairpur', 'kitakagaya', 'kochi', 'lakazlab', 'lamachinerie', 'lccc', 'leon', 'libya', 'lima', 'napoli', 'newcairo', 'ningbo', 'opendot', 'oshanghai', 'oulu', 'plusx', 'polytech', 'puebla', 'qbic', 'reykjavik', 'riidl', 'rwanda', 'santachiara', 'sedi', 'seoul', 'seoulinnovation', 'singapore', 'sorbonne', 'stjude', 'szoil', 'taipei', 'talents', 'techworks', 'tecsup', 'tecsupaqp', 'tianhelab', 'tinkerers', 'trivandrum', 'twarda', 'uae', 'ucal', 'ucontinental', 'uemadrid', 'ulb', 'ulima', 'utec', 'vancouver', 'vestmannaeyjar', 'vigyanashram', 'waag', 'wheaton', 'winam', 'yucatan', 'zoi']

MIN_STUDENTS = 5

labs_by_continent = {

"vigyanashram":"Asia", # India

"oulu":"Europe", # Finland

"kamplintfort":"Europe", # Germany

"charlotte":"North America", # USA (Assumed)

"lccc":"North America", # USA (Assumed)

"bahrain":"Asia", # Bahrain

"uae":"Asia", # United Arab Emirates

"libya":"Africa", # Libya

"techworks":"North America", # USA (Assumed)

"newcairo":"Africa", # Egypt

"egypt":"Africa", # Egypt

"lakazlab":"Africa", # Mauritius (Assumed)

"tecsup":"South America", # Peru

"wheaton":"North America", # USA (Assumed)

"fablabuae":"Asia", # United Arab Emirates

"qbic":"Asia", # Qatar (Assumed)

"kochi":"Asia", # India

"ied":"Europe", # Italy (Assumed)

"fablabtrivandrum":"Asia", # India

"fablabakgec":"Asia", # India

"barcelona":"Europe", # Spain

"fablabsorbonne":"Europe", # France

"fablabcept":"Asia", # India

"rwanda":"Africa", # Rwanda

"leon":"Europe", # Spain (Assumed)

"lamachinerie":"Europe", # France (Assumed)

"fablabdigiscope":"Europe", # France (Assumed)

"energylab":"Europe", # Denmark (Assumed)

"akgec":"Asia", # India

"irbid":"Asia", # Jordan

"reykjavik":"Europe", # Iceland

"sorbonne":"Europe", # France

"incitefocus":"North America", # USA (Assumed)

"puebla":"North America", # Mexico

"tecsupaqp":"South America", # Peru

"ucontinental":"South America", # Peru

"fablabopendot":"Europe", # Italy

"santachiara":"Europe", # Italy

"fablabechofab":"North America", # Canada

"zoi":"Asia", # China (Assumed)

"cidi":"North America", # USA (Assumed)

"dassault":"Europe", # France (Assumed)

"stjude":"North America", # USA (Assumed)

"aalto":"Europe", # Finland

"fablabzoi":"Asia", # China (Assumed)

"ecae":"Asia", # United Arab Emirates